Dynamo Paper Review

Dynamo: Amazon’s Highly Available Key-value Store

Buzzwords

Highly decentralized, loosely coupled, service-oriented architecture

Consistent Hashing

- virtual nodes

Quorum-like technique

- R + W > N

Decentralized replica synchronization protocol

anti-entropy (replica synchronization) protocol

a big word for synchronizing two replicas

Merkle tree

Object versioning

syntactic reconciliation

new versions subsume the previous version(s), and the system itself can determine the authoritative version

semantic reconciliation

the reconciliation in order to collapse multiple branches of data evolution back into one

vector clock

Gossip-based distributed failure detection and membership protocol

Eventually-consistent storage system

Service level agreements(SLA)

Application level update conflicts resolution

Preference list

- The list of nodes that is responsible for storing a particular key

Coordinator

Hinted Handoff

Stage event-based architecture(SEDA)

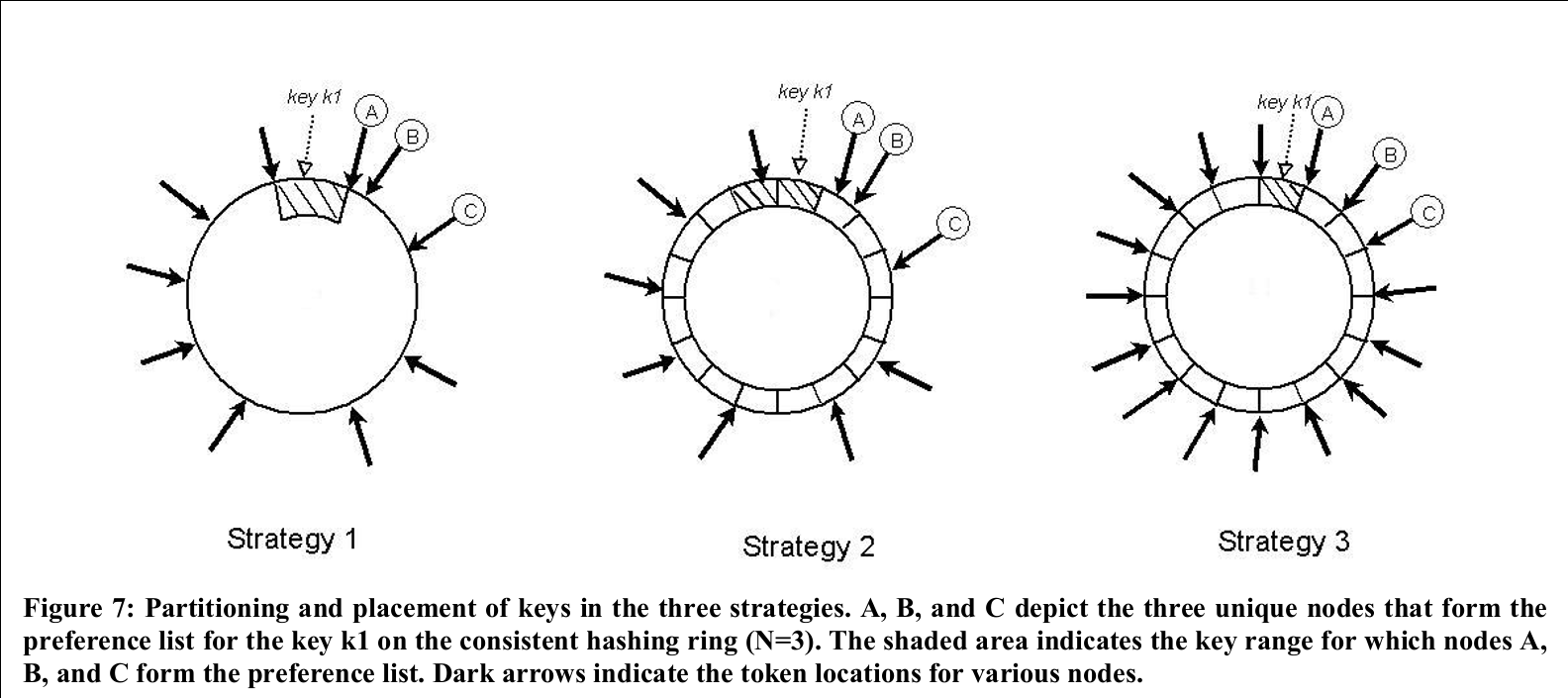

Dynamo’s partitioning scheme’s three stages

T random tokens per node and partition by token value

T random tokens per node and equal sized partitions

Q/S tokens per node, equal-sized partitions

Coordination

Client-driven

Server-driven(load balancer)

Summary

The paper introduces an eventually consistent distributed, application-level conflicts resolution K/V database which focuses that write never fails called Dynamo. Dynamo is much like PNUTS with eventual consistency while PNUTS uses per-record-timeline consistency to ensure one order. Moreover Dynamo is much like Bayou with its application-level resolution while Bayou may rollback writes.

Dynamo is built for Amazon’s e-commerce platform which guarantees service level agreements purely targeting at controlling performance at the 99.9th percentile. So Dynamo mainly focuses on the performance and availability(“always writable”) instead of consistency and durability.

The paper introduces the architecture in several core components:

- Partition && Replication

The Dynamo uses consistency hashing to partition the storage with

virtual nodes for uniform distribution. Moreover, the each hashed key is

replicated on several successor nodes and all nodes contain the key are

called perference list of the key. The coordinator (who accept the

put/get from clients) then forwards the all from clients to the nodes

in the list. The consistent hashing is decentralized and almost balanced

due to virtual nodes, while data may need to be shift to other nodes when

new nodes join/leave the system. Dynamo treats all failure as temporary

and only make changes when explicitly add/remove nodes and the operation should take

a long time.

- Data Versioning

Because of eventual consistency and always writable requirements,

divergent replicas need to be resolved. Dynamo allows multiple versions

between the replicas and use vertor clock and application level

resolution to resolve the conflicts. For example, the case of shopping

cart uses union which may result in no lost ‘add to chart’ while may

cause deleted one to resurface.

- System API

Dynamo only supports put/get. There are two ways of routing. The first

one is using load balancer, which transfer the request to load

balancer, and load balancer transfer it to a random node, and then

the node will forward according to the preference list. The second one is

just linking it to a client library which will transfer the request to the

appreciate node.

Dynamo uses sloppy quorum and hinted handoff to achieve always

writable requirements.

The sloppy quorum means that with R(node reply to the client only R

replicas replies), W(node reply to the client only W-1 replicas replies),

N(first N reachable nodes in reference list) and quorum rule that R + W

> N, there will be an overlap between R and W, so Dynamo can promise

consistency.

The hinted handoff means that when first N nodes in the reference list are

unreachable, we may need to use other nodes to record the temporary data

and transfer the data when the node is reachable again. This technique

may result in some inconsistency while improving the availability.

Due to failure, replicas may become unsynchronized, Dynamo uses the

Merkle tree (hashing based tree) and gossip protocol as the anti-entropy

protocol, which can reduce the data necessary for transfer.

- Membership

According to the paper, a gossip-based protocol propagates membership changes and maintains an eventually consistent view of membership. So some inconsistency may be introduced. For example, the deleted server might get puts meant for its replacement and added server might miss some puts because not known to the coordinator.

- Design experience

Dynamo’s consistent hashing design experiences evolution. The old scheme is randomly partitioned which requires the scan(O(n)) to split the range of the nodes. And the new scheme just uniformly divided the circle into Pre-determined set, and transfer the ranges for simplicity and performance.

Strength of the paper

An awesome system that makes use of lots of algorithms aimed at special questions and mixes them smoothly to build Dynamo.

The always writable model is meaningful to the application such as Amazon due to SLA for the 99.9th percentile.

Nice experience introduction of how and why design evolves.

Weakness of the paper

- Comparison between the design of P2P and single master may be necessary for why amazon decentralized architecture.